A LSTM-Based Anomaly Detection Model for Log Analysis

Abstract

Security devices produce a huge number of logs, far beyond what human beings can process. This paper introduces an unsupervised approach to detecting anomalous behaviors in large-scale security logs. We propose a novel feature extraction mechanism that can precisely characterize malicious behaviors. We design an LSTM-based anomaly detection approach that successfully identifies attacks on two widely used datasets. Our approach outperforms three popular anomaly detection algorithms, one-class SVM, GMM and Principal Component Analysis, in terms of accuracy and efficiency.

Introduction

The running state of a system is normally recorded in log files, which are used for debugging and error detection, so log information is a valuable resource for anomaly detection. Log data is naturally time-series data, and the contents and types of events recorded in a log file tend to be stable. Except for some highly covert APT attacks, most attacks are not instantaneous and follow a fixed pattern, so the log data also exhibits a pattern when recording malicious behaviors. This makes it possible to discover anomalies from log sequences.

Traditional methods rely on administrators to analyze log text manually. This process incurs large labor costs and requires the system administrator to understand the network environment and be proficient in the system architecture.

However, to avoid being tracked by security administrators, attackers increasingly generate logs that resemble those produced by normal access behaviors. In addition, because of the wide variety of applications and services, each web node generates a large number of logs, so log files become extremely large. It may not be feasible to process these logs manually. Since these logs may contain signals of malicious behaviors, automated anomaly detection methods are needed for their analysis.

Existing automatic anomaly detection methods based on log information fall into two categories: supervised learning methods that rely on labels, such as decision trees, LR and SVM, and unsupervised learning methods based on PCA, clustering and invariant mining. Supervised learning performs very well at detecting known malicious behaviors or abnormal states, but because it depends on prior knowledge, it cannot detect unknown attacks. Unsupervised methods can detect unknown anomalies, but most of them still need better accuracy.

Existing research introduced the concept of the longest common subsequence to reduce the number of matching patterns obtained during computation [1], enabling simple classification of logs. Xu et al. used association rules, generated from confidence scores, to mine frequent item sets and then detected attack behavior based on these rules [2]. Zhao et al. used a character-matching method to study the classification of system logs and determined the correspondence between log types and characters [3]. Seker S.E. et al. took the occurrence frequency of characters as the key element for recognizing log sequences, abstracted different types of logs into different characters, and selected an adaptive K value according to their characteristics [4]. This existing research shows that there is a strong correlation between logs and their character composition.

Our model is based on LSTM sequence mining. As a data-driven anomaly detection method, it learns the sequence patterns of normal logs, detects unknown malicious behaviors, and identifies red team attacks among a large number of log sequences. Because the model performs character-level analysis of the raw log text directly, no extensive log preprocessing such as log classification or matching is needed, which greatly reduces computational complexity.

However, the current model still has some problems. For example, it only performs character-level analysis, which may miss some high-level features. A natural idea is to analyze the logs hierarchically, but research has found that this does not improve performance [5], so the relevant methods need further study. In addition, since no log correlation matching is performed, only individual abnormal log lines can be flagged, and the anomaly level of each user cannot be obtained directly. A possible solution is to trace users through the log entries judged to be abnormal, but this is somewhat cumbersome.

Contribution

Through joint efforts and after discussing the experiments together, Wenhao ZHOU modified the LSTM code and trained the model, Jiuyao ZHANG completed the comparative experiments, and Ziqi YUAN wrote the article.

The first section of this paper introduces the background, the goal of the model and the existing problems. The second section introduces the methods used in the model, including the data processing method, the specific structure of the LSTM network, and the adjustment of parameters. The third section compares this model with the methods in [5, 6] and illustrates its advantages. The fourth section introduces the experiments, including a detailed description of the datasets, the evaluation metrics, and a comparison with other methods such as one-class SVM, GMM and PCA.

Anomaly Detection Approach

Our approach learns character-level behaviors for normal logs, processing a stream of log-lines as follows:

- (1) Initialize the weights randomly.

- (2) Train the model on the log data of the first n days.

- (3) For each day k (k > n), in chronological order: first, use model $M_{k-1}$, trained on the log data of the first k−1 days, to produce anomaly scores for all events on day k; second, report per-user-event anomaly scores to analysts in rank order for inspection; third, update model $M_{k-1}$ to $M_k$ using the logs of day k.
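The three-step procedure above can be sketched as a simple loop. This is a minimal sketch under stated assumptions: `CharFrequencyModel` and `online_detection` are hypothetical names, and a smoothed character-frequency model stands in for the BiLSTM so that the example runs without a deep-learning framework. Only the control flow (train on the first n days, score day k with the model from day k−1, rank, then update) mirrors the text.

```python
import math
from collections import Counter


class CharFrequencyModel:
    """Hypothetical stand-in for the BiLSTM: a character unigram model with
    add-one smoothing over the 95 printable ASCII characters."""

    VOCAB_SIZE = 95

    def __init__(self):
        # Step (1) analogue: a fresh, untrained model.
        self.counts = Counter()
        self.total = 0

    def update(self, lines):
        """Continue training on an iterable of log lines."""
        for line in lines:
            self.counts.update(line)
            self.total += len(line)

    def score(self, line):
        """Mean negative log-likelihood per character (higher = more anomalous)."""
        nll = 0.0
        for ch in line:
            p = (self.counts[ch] + 1) / (self.total + self.VOCAB_SIZE)
            nll -= math.log(p)
        return nll / max(len(line), 1)


def online_detection(days, n):
    """days[d] is the list of (user, line) events of day d + 1 (0-based list)."""
    model = CharFrequencyModel()
    for day in days[:n]:                               # step (2): first n days
        model.update(line for _, line in day)
    reports = []
    for day in days[n:]:                               # step (3): days k = n+1, n+2, ...
        ranked = sorted(((model.score(line), user, line) for user, line in day),
                        reverse=True)                  # scored by M_{k-1}, highest first
        reports.append(ranked)
        model.update(line for _, line in day)          # M_{k-1} -> M_k
    return reports
```

Replacing `CharFrequencyModel` with a real sequence model only requires the same `update` and `score` methods.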

Log-Line Tokenization

Network logs can be obtained from many sources. Logs from different sources are naturally generated in different formats and record different information. To make the model as widely applicable as possible, we treat each log line directly as a string and feed its character-level information directly into the LSTM.

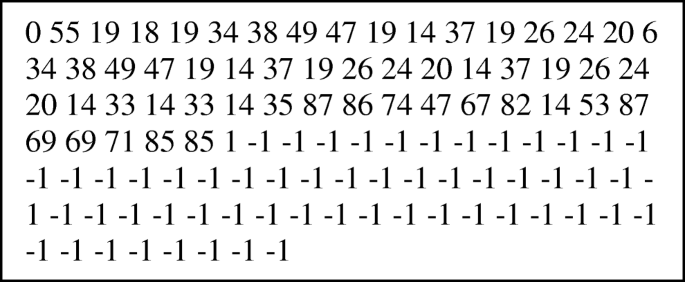

Only printable characters, ranging from 0x20 to 0x7e, are considered, so there are 95 characters in total. Each character is converted into its ASCII code minus 30, so the printable characters map to 2–96, while 0 marks the start of a line and 1 marks its end. The remaining positions are then padded with −1 so that all sequences have the same length for training. An example is shown in Fig. 1.

Example of processed features of the LSTM model.
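A minimal sketch of the tokenization described above, assuming that the maximum length includes the start and end tokens, that non-printable characters are simply dropped, and that overlong lines are truncated; the text does not say how these cases are handled. The names `tokenize`, `START`, `END` and `PAD` are illustrative.

```python
from typing import List

START, END, PAD = 0, 1, -1
OFFSET = 30  # ASCII code - 30: ' ' (0x20) -> 2, '~' (0x7e) -> 96


def tokenize(line: str, max_len: int) -> List[int]:
    """Map a log line to START, character codes, END, then pad with PAD to max_len."""
    codes = [ord(ch) - OFFSET for ch in line if 0x20 <= ord(ch) <= 0x7E]  # printable only
    codes = codes[: max_len - 2]          # assumed truncation, leaving room for START/END
    seq = [START] + codes + [END]
    return seq + [PAD] * (max_len - len(seq))
```

For example, `tokenize("ab", 6)` returns `[0, 67, 68, 1, -1, -1]`.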

LSTM Model

To calculate the anomaly score of each record, we consider a computation model for a single log line. RNNs are a common tool for this kind of sequential data. Specifically, we use an LSTM network whose input is the characters of each log line; it predicts the probability distribution of the next character, from which we infer how likely the log line, or the corresponding user account, is to be abnormal.

Bidirectional Event Model (BEM)

For a log line of K characters, let x(t) be the character at position t and h(t) the hidden representation at that position. Following the standard LSTM formulation [7], we have the following relations:

$$ \boldsymbol{h}(t)=\boldsymbol{o}(t)\cdot \tanh\left(\boldsymbol{c}(t)\right) $$

(2.1)

$$ \boldsymbol{c}(t)=\boldsymbol{f}(t)\cdot \boldsymbol{c}(t-1)+\boldsymbol{i}(t)\cdot \boldsymbol{g}(t) $$

(2.2)

$$ \boldsymbol{g}(t)=\tanh\left(x(t)\boldsymbol{W}(g,x)+\boldsymbol{h}(t-1)\boldsymbol{W}(g,h)\right) $$

(2.3)

$$ \boldsymbol{f}(t)=\sigma\left(x(t)\boldsymbol{W}(f,x)+\boldsymbol{h}(t-1)\boldsymbol{W}(f,h)+\boldsymbol{b}(f)\right) $$

(2.4)

$$ \boldsymbol{i}(t)=\sigma\left(x(t)\boldsymbol{W}(i,x)+\boldsymbol{h}(t-1)\boldsymbol{W}(i,h)+\boldsymbol{b}(i)\right) $$

(2.5)

$$ \boldsymbol{o}(t)=\sigma\left(x(t)\boldsymbol{W}(o,x)+\boldsymbol{h}(t-1)\boldsymbol{W}(o,h)+\boldsymbol{b}(o)\right) $$

(2.6)

Here, the initial hidden state h(0) and the initial cell state c(0) are set to zero vectors, the dot symbol · denotes element-wise multiplication, and σ is the standard logistic function:

$$ \sigma(x)=\frac{1}{1+e^{-x}} $$

(2.7)

Vector g is the candidate value computed from the current input and the previous hidden state. Vectors f, i and o are the standard forget gate, input gate and output gate of the LSTM. The matrices W and bias vectors b are the model parameters.
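Equations (2.1)–(2.7) can be transcribed into a single LSTM time step. The sketch below is plain Python for readability rather than speed; it assumes x(t) is already a numeric vector (for example a one-hot encoding or an embedding of the character token), uses small random initial weights, and the parameter dictionary keys such as `Wgx` and `bf` are hypothetical names. As in (2.3), the candidate g(t) has no bias term.

```python
import math
import random


def sigmoid(z):
    """Logistic function of eq. (2.7), written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def affine(x, h, Wx, Wh, b=None):
    """x Wx + h Wh (+ b) with row vectors, matching x(t)W(.,x) + h(t-1)W(.,h)."""
    out = [sum(x[i] * Wx[i][j] for i in range(len(x)))
           + sum(h[i] * Wh[i][j] for i in range(len(h)))
           for j in range(len(Wh[0]))]
    return out if b is None else [o + bj for o, bj in zip(out, b)]


def lstm_step(x, h_prev, c_prev, P):
    g = [math.tanh(z) for z in affine(x, h_prev, P["Wgx"], P["Wgh"])]         # (2.3)
    f = [sigmoid(z) for z in affine(x, h_prev, P["Wfx"], P["Wfh"], P["bf"])]  # (2.4)
    i = [sigmoid(z) for z in affine(x, h_prev, P["Wix"], P["Wih"], P["bi"])]  # (2.5)
    o = [sigmoid(z) for z in affine(x, h_prev, P["Wox"], P["Woh"], P["bo"])]  # (2.6)
    c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c_prev, i, g)]        # (2.2)
    h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]                           # (2.1)
    return h, c


def init_params(input_dim, hidden_dim, seed=0):
    """Small random weights and zero biases (hypothetical initialization)."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

    P = {}
    for gate in "gfio":
        P[f"W{gate}x"] = mat(input_dim, hidden_dim)
        P[f"W{gate}h"] = mat(hidden_dim, hidden_dim)
    for gate in "fio":
        P[f"b{gate}"] = [0.0] * hidden_dim
    return P


def run_lstm(xs, P, hidden_dim):
    """Run the recurrence from h(0) = c(0) = 0 and return h(1), ..., h(K)."""
    h, c = [0.0] * hidden_dim, [0.0] * hidden_dim
    hs = []
    for x in xs:
        h, c = lstm_step(x, h, c, P)
        hs.append(h)
    return hs
```

The reverse LSTM of a BiLSTM can reuse `lstm_step` with its own parameters by running `run_lstm` over the reversed input sequence.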

In addition, since the entire content of a log line is available at once, and the character at a given position is clearly related to its context on both sides, we build a bidirectional LSTM (BiLSTM), which also infers the character probability distribution at each position from back to front. For this purpose, we add a second set of hidden vectors h^b(K+1), h^b(K), …, h^b(1), so that the model runs the LSTM equations in reverse at the same time. The superscript b marks the parameters of the reverse LSTM, such as its parameter matrices W^b.

The hidden values are used to predict the character at each position, giving the distribution p; specifically, we have

$$ \boldsymbol{p}(t)=\mathrm{softmax}\left(\boldsymbol{h}(t-1)\boldsymbol{W}(p)+{\boldsymbol{h}}^b(t+1){\boldsymbol{W}}^b(p)+\boldsymbol{b}(p)\right) $$

(2.8)

Compared with an ordinary unidirectional LSTM, the hidden value h^b and matrix W^b of the reverse LSTM are added to the prediction function, which enables the model to predict the character at a given position from both the forward and backward directions at the same time.
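Equation (2.8) combines the forward state before position t with the backward state after it, so the prediction of x(t) never sees x(t) itself. A minimal sketch, assuming the same row-vector conventions as (2.1)–(2.6); the function names are illustrative:

```python
import math


def softmax(z):
    m = max(z)                                 # shift by the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def row_times_matrix(h, W):
    """Row vector h (length H) times matrix W (H x V)."""
    return [sum(h[i] * W[i][j] for i in range(len(h))) for j in range(len(W[0]))]


def predict_char(h_fwd_prev, h_bwd_next, W_p, W_p_b, b_p):
    """Eq. (2.8): p(t) = softmax(h(t-1) W(p) + h^b(t+1) W^b(p) + b(p))."""
    fwd = row_times_matrix(h_fwd_prev, W_p)
    bwd = row_times_matrix(h_bwd_next, W_p_b)
    return softmax([f + b + c for f, b, c in zip(fwd, bwd, b_p)])
```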

Finally, the cross-entropy loss is defined as

$$ \frac{1}{K}\sum_{t=1}^{K} H\left(x(t),\boldsymbol{p}(t)\right) $$

(2.9)

and is minimized to update the weights. We train this model using stochastic mini-batch (non-truncated) back-propagation through time.
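A sketch of the per-line loss (2.9), assuming one-hot targets, so that each cross-entropy term reduces to −log p(t)[x(t)], and assuming that padded positions (token −1) are excluded from the average. Using this loss of a trained model as the line's anomaly score is an assumption in line with inferring abnormality from the predicted distribution; the function name is hypothetical.

```python
import math

PAD = -1  # padding value used when sequences are filled to a fixed length


def line_anomaly_score(targets, probs, eps=1e-12):
    """Mean cross-entropy of eq. (2.9) over the non-padding positions of one line.

    targets[t] is the token id x(t); probs[t] is the predicted distribution p(t).
    With a one-hot target, H(x(t), p(t)) reduces to -log p(t)[x(t)].
    """
    pairs = [(x, p) for x, p in zip(targets, probs) if x != PAD]
    if not pairs:
        raise ValueError("line has no non-padding positions")
    return -sum(math.log(max(p[x], eps)) for x, p in pairs) / len(pairs)
```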

Related Work

The most relevant works are [5, 6].

Among them, [5] constructs a two-layer LSTM model for the Knet2016 dataset. Its lower layer is an LSTM network over the tokens of each log line; the hidden states of the tokens are used as features of the line to obtain its log-line type, and an upper LSTM model is built to predict the type of the next log line. However, the experimental results show that the two-layer structure does not improve prediction performance: its metrics are lower than those of the simple single-layer model.

Due to limited computing power, we had to use less data, so we did not build a two-layer LSTM model but used only a single-layer BiLSTM model, which predicts the likely characters bidirectionally at the same time. On the one hand, this ensures detection performance to a certain extent; on the other hand, it directly reduces the demand for computing power.

[6] discusses in detail the value and potential problems of many kinds of logs and gives a new, comprehensive overview of real network security data. That paper enumerates several kinds of logs and counts word frequencies and other information, but does not give a detailed, feasible processing method. Each kind of log has a different format and different reserved words. Even with a detailed analysis, it is hard to find a common and fast anomaly detection method that works for different kinds of logs.

Therefore, we perform character-level analysis and prediction, which directly sidesteps the large differences between kinds of logs. Because normal and anomalous log lines are clearly different at the character level, this kind of method is well founded.

Experimental Results

Data

We used the Los Alamos National Laboratory (LANL) cyber security dataset (Kent 2016), which contains event logs collected from LANL's internal computer network over 58 consecutive days, with more than 1 billion log lines, including authentication, network traffic and other records. Fields involving privacy have been anonymized. Besides normal network activities, 30 days of red team attacks were recorded.

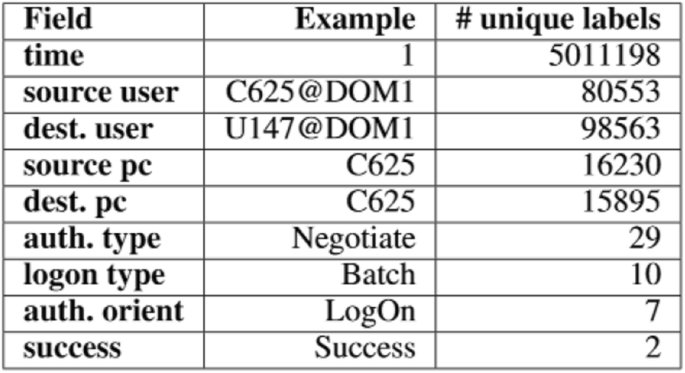

[5] gives some statistical descriptions; the parts we use, which are the authentication event logs, are shown in Table 1. We used 3,028,187 loglines from 20 days, the first 14 days for training and the remaining 6 days for testing, with 51 anomalous loglines and 26 anomalous user-days.

After tokenization, all log lines are padded to a length of 112.

We also used the insider threat test dataset (R6.2), a collection of synthetic insider threat test datasets that provide both background and malicious actor synthetic data. It covers device interaction, email, the file system and other aspects of log content. We chose the device access log and the internet access log for the experiments. The fields and statistics of the internet access log are summarized in Table 2a, and those of the device access log in Table 2b.

We used 1,676,485 loglines from 21 days, the first 14 days for training and the remaining 7 days for testing, with 530 anomalous loglines and 530 anomalous user-days.

During tokenization, we found that loglines in the internet access dataset reach up to 2,500 characters. Padding all lines to such a length would greatly increase the amount of computation. Therefore, only the domain name is extracted from the URL column, and key phrases are extracted from the page introduction column with the RAKE algorithm [8]. The final sequence length is set to 830.
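The two reductions described above can be sketched as follows. `url_to_domain` relies on the standard library's `urllib.parse.urlsplit`; `rake_phrases` is a simplified re-implementation of the RAKE scoring idea (candidate phrases split at stopwords and punctuation, each word scored by degree divided by frequency, a phrase scored by the sum of its word scores). The tiny stopword list and both function names are assumptions, not the authors' code.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# A deliberately tiny stopword list; real RAKE uses a much longer one.
STOPWORDS = {"a", "an", "and", "are", "as", "at", "by", "for", "from", "in",
             "is", "of", "on", "or", "that", "the", "this", "to", "with"}
WORD = re.compile(r"[a-z0-9']+")


def url_to_domain(url):
    """Keep only the host part of a URL (lower-cased)."""
    if "://" not in url and not url.startswith("//"):
        url = "//" + url              # urlsplit only fills netloc after '//'
    return urlsplit(url).hostname or ""


def rake_phrases(text, top_k=3):
    """Simplified RAKE: split at stopwords/punctuation, score words by degree/frequency."""
    tokens = re.findall(r"[a-z0-9']+|[^\sa-z0-9']", text.lower())
    phrases, current = [], []
    for tok in tokens:
        if tok in STOPWORDS or not WORD.fullmatch(tok):
            if current:
                phrases.append(tuple(current))
            current = []
        else:
            current.append(tok)
    if current:
        phrases.append(tuple(current))
    freq, degree = Counter(), Counter()
    for phrase in phrases:
        for word in phrase:
            freq[word] += 1
            degree[word] += len(phrase)   # co-occurrences, counting the word itself
    scores = {" ".join(p): sum(degree[w] / freq[w] for w in p) for p in phrases}
    return sorted(scores, key=scores.get, reverse=True)[:top_k]
```

For example, `rake_phrases("Compatibility of systems of linear constraints", top_k=1)` returns `["linear constraints"]`, because the two-word phrase accumulates the highest degree.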

Timescale

For this work, we consider the following timescales.

First, each line of the log records a relatively independent action; for example, a user performs an authentication, and the result is success or failure. Each line is therefore treated as an independent input. We call this timescale the logline level. As its name suggests, logline-level analysis computes the anomaly score of each line.

Second, to compare with the baseline experiments, we integrate all actions recorded in the logs of each user on each day: the anomaly scores of a user's loglines on a day are aggregated into an anomaly score for that user on that day. As we use the maximum anomaly score of the user, this is called the user-day-max level. It indicates how likely a user is to be anomalous on a specific day.

In addition, we use a normalization strategy. From the anomaly score of each line, we first subtract the average anomaly score of the corresponding user on the same day, and then aggregate as usual, which normalizes the original scores. Because this normalization is based on a difference, it is called the user-day-diff level; it measures the difference between the maximum anomaly score and the average score of a user on a specific day.
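The two user-day aggregations can be sketched as below, assuming line scores arrive as (user, day, score) triples; `aggregate_user_day` is a hypothetical helper. Subtracting the user-day mean from every line and then taking the maximum gives the same value as the maximum minus the mean, which is what the code computes.

```python
from collections import defaultdict


def aggregate_user_day(line_scores):
    """line_scores: iterable of (user, day, score) triples.

    Returns two dicts keyed by (user, day):
      user-day-max:  max of the user's line scores that day
      user-day-diff: that max minus the mean of the same scores
    """
    groups = defaultdict(list)
    for user, day, score in line_scores:
        groups[(user, day)].append(score)
    day_max = {key: max(s) for key, s in groups.items()}
    day_diff = {key: max(s) - sum(s) / len(s) for key, s in groups.items()}
    return day_max, day_diff
```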

Metric

We use AUC as the evaluation metric, which is the area under the receiver operating characteristic (ROC) curve. It characterizes the trade-off in detection performance between true positives and false positives.

The AUC value is at most 1, and the higher the value, the better the model performs; a random ranking gives an AUC of about 0.5.
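For reference, AUC equals the probability that a randomly chosen anomalous item is scored higher than a randomly chosen normal one, with ties counted as one half. The quadratic-time sketch below computes it directly from that definition; for large test sets, a rank-based implementation such as scikit-learn's `sklearn.metrics.roc_auc_score` would be the practical choice. The function name `roc_auc` is illustrative.

```python
def roc_auc(labels, scores):
    """AUC from its pairwise definition; labels are 1 (anomalous) or 0 (normal)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            wins += 1.0 if p > n else 0.5 if p == n else 0.0
    return wins / (len(pos) * len(neg))
```

For example, `roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])` returns `0.75`: three of the four anomalous/normal pairs are ordered correctly.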

Baselines

Following [5], for the data we used, we define a multidimensional aggregate feature vector for each user as the basis of the comparative experiments.

We consider three baseline models: one-class SVM, GMM and PCA.

Data Processing

For the data, we first filter out the abnormal logs in the dataset, keep only the normal logs, and take 70% of them as the training set; all of the remaining 30% of the original dataset is then used as the test set.

Specifically, the frequency of field values is divided into common and uncommon, and the object is divided into single user and all users. Source PC name, target PC name, target user, process name and the PC name of the process are taken as the key fields for statistics. Time is divided into the whole day, 0–6, 6–12, 12–18 and 18–24.

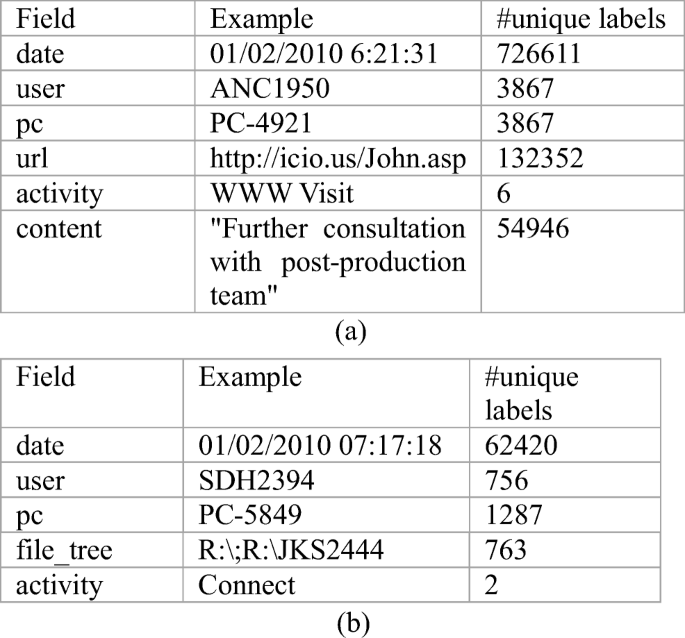

Taking the Cartesian product of frequency class, object, key field and time period gives 100 statistical features (2 × 2 × 5 × 5 = 100). Fields that frequently appear in the log, such as the login result (success or failure), are then added as features, giving a 134-dimensional feature vector in the end.
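The 100 statistical features are the Cartesian product of 2 frequency classes, 2 object scopes, 5 key fields and 5 time windows. The sketch below enumerates them with `itertools.product`; the short field and window labels are hypothetical names, and the remaining 34 features (the login result and other frequent fields) are not itemized in the text, so they are not reproduced here.

```python
from itertools import product

FREQUENCY = ["common", "uncommon"]
OBJECT = ["single_user", "all_users"]
KEY_FIELDS = ["source_pc", "target_pc", "target_user", "process_name", "process_pc"]
TIME_WINDOWS = ["all_day", "00-06", "06-12", "12-18", "18-24"]

# One count feature per combination, e.g. "uncommon|single_user|target_pc|18-24".
STAT_FEATURES = ["|".join(combo)
                 for combo in product(FREQUENCY, OBJECT, KEY_FIELDS, TIME_WINDOWS)]
```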

The daily log of each user is summarized. If a field takes only a few distinct values, such as the login result, the frequency of each value is counted. If a field takes many distinct values, such as PC name or user name, the values are divided into common and uncommon.

For a single user, if the frequency of a value is less than 5%, it is classified as uncommon; otherwise, it is classified as common. For all users, if the frequency of a value is less than the average in its field, it is classified as uncommon; otherwise, it is classified as common. Counting the data for each dimension then gives the feature vector of each user for each day.
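A sketch of the two common/uncommon rules, under two assumptions the text leaves open: a per-user frequency is the fraction of that user's records taking the value, and the field average for all users is the mean count over the field's distinct values. The function names are hypothetical.

```python
from collections import Counter


def classify_for_user(values, threshold=0.05):
    """Per-user rule: a value seen in < 5% of this user's records is uncommon."""
    counts = Counter(values)
    total = len(values)
    return {v: "uncommon" if c / total < threshold else "common"
            for v, c in counts.items()}


def classify_for_all_users(values):
    """Global rule: a value whose count is below the field's mean count
    (averaged over its distinct values) is uncommon."""
    counts = Counter(values)
    mean_count = sum(counts.values()) / len(counts)
    return {v: "uncommon" if c < mean_count else "common"
            for v, c in counts.items()}
```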

An example is shown in Fig. 2.

Example of processed features of baselines.

- A. Principal Component Analysis

PCA is used to learn a representation of the extracted feature vectors: the data are projected from the original space onto the principal component space and then reconstructed back in the original space. If only the first principal component is used for projection and reconstruction, the reconstruction error is small for most data but remains relatively large for outliers.
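A dependency-free sketch of this scoring rule: center the feature vectors, find the first principal component by power iteration on the covariance matrix (used here instead of a full eigendecomposition), project and reconstruct, and use the Euclidean reconstruction error as the anomaly score. The function name and the iteration count are assumptions.

```python
import math


def pca_reconstruction_errors(X, iters=200):
    """Reconstruction error of each row of X using only the first principal component."""
    n, d = len(X), len(X[0])
    mean = [sum(row[j] for row in X) / n for j in range(d)]
    Xc = [[row[j] - mean[j] for j in range(d)] for row in X]           # center the data
    cov = [[sum(r[a] * r[b] for r in Xc) / n for b in range(d)] for a in range(d)]
    v = [1.0 / math.sqrt(d)] * d                                        # unit start vector
    for _ in range(iters):                                              # power iteration
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break
        v = [x / norm for x in w]
    errors = []
    for r in Xc:
        proj = sum(r[j] * v[j] for j in range(d))                       # coordinate on PC1
        recon = [proj * v[j] for j in range(d)]                          # map back
        errors.append(math.sqrt(sum((r[j] - recon[j]) ** 2 for j in range(d))))
    return errors
```

Points lying along the dominant direction reconstruct almost exactly, while a point off that direction keeps a large residual, which is the property the baseline relies on.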

- B. One-Class SVM

In log anomaly detection there are only two categories, normal and abnormal, and normal data far outnumber abnormal data. Therefore, a one-class SVM can be trained on the extracted features to perform anomaly detection.

- C. Gaussian Mixture Model

The Gaussian Mixture Model (GMM) is one of the most prevalent statistical approaches to anomaly detection; it uses Maximum Likelihood Estimation (MLE) to estimate the means and variances of the Gaussian distributions [9]. Several Gaussian distributions are combined to model the extracted feature vectors, and outliers are then identified.
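To illustrate how MLE fits a mixture, the sketch below runs expectation-maximization for a one-dimensional, two-component GMM and scores a point by its negative log-likelihood. It is a simplification: the baselines use 134-dimensional feature vectors, for which a library implementation such as scikit-learn's `GaussianMixture` (whose `score_samples` method returns per-sample log-likelihoods) would be used. The quantile initialization, the variance floor and the function names are assumptions.

```python
import math


def gaussian_pdf(x, mu, var):
    return math.exp(-(x - mu) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def fit_gmm_1d(xs, k=2, iters=100, min_var=1e-6):
    """Fit a k-component 1-D GMM by EM; returns (weights, means, variances)."""
    n = len(xs)
    ordered = sorted(xs)
    # Initialize means at evenly spaced quantiles and variances at the overall variance.
    mus = [ordered[(2 * j + 1) * n // (2 * k)] for j in range(k)]
    mean = sum(xs) / n
    var0 = max(sum((x - mean) ** 2 for x in xs) / n, min_var)
    variances = [var0] * k
    weights = [1.0 / k] * k
    for _ in range(iters):
        # E-step: responsibility of each component for each point.
        resp = []
        for x in xs:
            p = [w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, mus, variances)]
            total = sum(p) or 1e-300
            resp.append([pj / total for pj in p])
        # M-step: maximum-likelihood updates of weights, means and variances.
        for j in range(k):
            nj = sum(r[j] for r in resp) or 1e-300
            weights[j] = nj / n
            mus[j] = sum(r[j] * x for r, x in zip(resp, xs)) / nj
            variances[j] = max(
                sum(r[j] * (x - mus[j]) ** 2 for r, x in zip(resp, xs)) / nj, min_var)
    return weights, mus, variances


def gmm_anomaly_score(x, model):
    """Negative log-likelihood under the fitted mixture (higher = more anomalous)."""
    weights, mus, variances = model
    lik = sum(w * gaussian_pdf(x, m, v) for w, m, v in zip(weights, mus, variances))
    return -math.log(max(lik, 1e-300))
```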

Results and Analysis

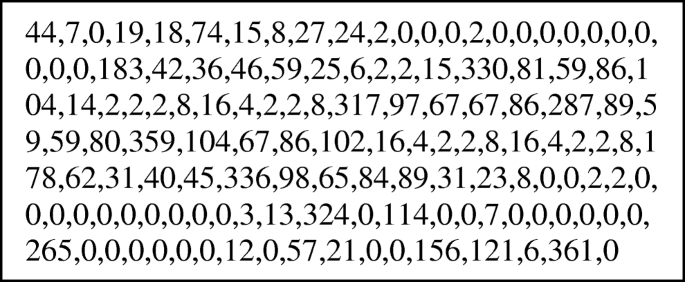

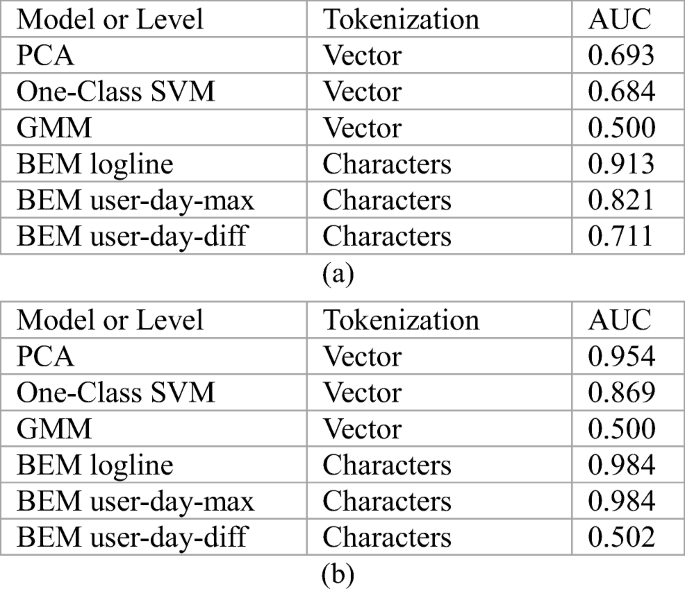

Table 3a summarizes the detection performance on the Knet2016 dataset, and the ROC curves are shown in Fig. 3.

ROC curves for dataset Knet2016.

Among all the methods, logline-level detection with BEM performs best, and its user-day-level detection is also satisfactory. Since the feature vectors used by the baselines are equivalent to user-day-level detection, the results show that the BEM model outperforms the baselines.

For the baselines, performance is determined by the feature vectors used. These vectors currently focus on statistics of the logs generated by a user on a day in different time periods [5]. If such statistics do not directly reflect the pattern of anomalous logs, baselines trained on these vectors will not achieve satisfactory results.

BEM performs much better at the logline level than at the user-day level. Good performance at the logline level is easy to achieve because normal logs outnumber anomalous logs by a factor of hundreds of thousands, so a few false positives do not reduce the score much. This is also why the logline-level AUC in [5] is so high.

At the user-day level, we aggregate the anomaly scores of the loglines into the anomaly score of a user on a day. After aggregation, the ratio of normal to abnormal samples drops to several thousand to one, which makes a high AUC harder to achieve. Moreover, the aggregation of anomaly scores itself may degrade performance. A better method for computing users' anomaly scores needs to be explored.

Table 5b summarizes the detection performance on the R6.2 dataset, and the ROC curves are shown in Fig. 4. Several curves coincide completely, which suggests that the dataset used in this experiment is too small.

ROC curves for dataset R6.2.

The result of the user-day-diff method is not satisfactory. One reason may be that the ratio of normal to abnormal samples is too small.

Consistent with [5], we also find that the model performs better at the logline level than at the user-day level. However, [5] found no significant difference between the logline level and the user-day level when using the same training data. This may be caused by the large difference in the amount of training data: due to limited computing power, we used only a small part of the dataset to train the model, so only methods that need little data can maintain the best results.

The two kinds of logs are also analyzed separately, and the results are shown in Fig. 5. They show that the results of the user-day-diff method are not caused by combining the results of different logs.

Separate ROC curves for dataset R6.2: (a) Device data and (b) http data.

Conclusion

Based on an analysis of the log content in the datasets, we build an LSTM-based anomaly detection model. After processing the logs in the Knet2016 and R6.2 datasets and training and testing the model on the extracted log-line text, the results show that it correctly detects anomalies. Comparative experiments show that it performs better than other models, including PCA, one-class SVM and GMM.

However, the comparison between this model and other classical models still needs further testing. On the one hand, training and testing should be carried out on larger datasets from more diverse sources to obtain more general results; on the other hand, isolation forest should be introduced as a baseline for comparative experiments, because it was noted as the best-performing anomaly detection algorithm in the recent DARPA insider threat detection program [10].

For BEM itself, the use of the datasets still needs to be adapted. For each user on each day, a fixed number of logs could be selected for training to avoid outsized influence from any single user. Currently, there are only 64 cells in the LSTM layer; more cells could be used in the next step.

A better method for computing a user's anomaly score needs to be explored. Anomalous loglines contain information about anomalous users, so a better aggregation method could improve the model's detection performance at the user-day level.

Based on theoretical analysis, we abandoned the multi-layer model structure, which allows the model to work with less computing power. However, this does not mean that a multi-layer model has no value.

[11] proposes another training and prediction method at the log-line level that also uses an LSTM model. Using the Drain approach proposed in [12], each log line is parsed to obtain its category, and the sequence of categories is used to train an LSTM that predicts the distribution of the next log-line category.

Clearly, that kind of anomaly detection operates on sequences of log-line categories, which differs from the character-level model in this paper. These two levels of prediction do not interfere with each other, so combining them is worth considering. For instance, the results of the two could be weighted directly, or a parsing method other than relying on the hidden values of the BiLSTM to extract log-line features could be used to parse the logs. The resulting sequences of log-line features could also be trained with a BiLSTM.

Due to limited computing power, the datasets were not fully used, so the scale of the training data should be expanded to obtain better results. In addition, besides the device access and internet access logs used here, the R6.2 dataset also contains logon logs, file access logs, etc. Using these logs together will enhance the ability to detect anomalous users and should be especially useful for identifying red team users.

However, the combined use of logs from different sources requires further research. Logs from different sources have different structures, so mixing them directly and training a character-level model on them may not yield good results. A natural idea is to process these logs separately and then integrate the per-user anomaly scores obtained from each; another idea is to parse the logs into category codes [12], sort these codes by time, and then use several models to make predictions on the sequence. This second method will inevitably lose part of the information.

In some sense, this is also a kind of hierarchical structure. Whether computing users' anomaly scores per log category and then integrating them, or parsing loglines and then detecting, the idea is to synthesize existing information at one level and then use the combined result to detect anomalies at a higher level.

All in all, hierarchical model structures are worth further study.

References

[1] Y. Zhao, X. Wang, H. Xiao and X. Chi, Improvement of the Log Pattern Extracting Algorithm Using Text Similarity, 2018 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), Vancouver, BC, 2018, pp. 507–514.

[2] Xu, Grand. Y., Gong, X. R., & Cheng, M. C. (2016). Audit log association rule mining based on improved Apriori algorithm. Computer Application, 36(7), 1847–1851.

[3] Y. Zhao and H. Xiao, Extracting Log Patterns from System Logs in Large, 2016 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), Chicago, IL, 2016, pp. 1645–1652.

[4] Seker, S. E., Altun, O., Ayan, U., & Mert, C. (2014). A novel string distance function based on most frequent K characters. International Journal of Machine Learning & Computing, 4(2), 177–183.

[5] Tuor A, Baerwolf R, Knowles N, et al. Recurrent neural network language models for open vocabulary event-level cyber anomaly detection. 2017.

[6] Kent, Alexander D. Cyber security data sources for dynamic network research, Dynamic Networks and Cyber-Security. 2016.

[7] Hochreiter S. and Schmidhuber J. Long Short-Term Memory, Neural Computation 9(8):1735–1780.

[8] Rose S, Engel D, Cramer N, et al. Automatic keyword extraction from individual documents, Text Mining: Applications and Theory. John Wiley & Sons, Ltd, 2010.

[9] Wikipedia contributors. Maximum Likelihood Estimation, available: https://en.wikipedia.org/w/index.php?title=Maximum_likelihood_estimation&oldid=857905834, (2015).

[10] Gavai, G., Sricharan, K., Gunning, D., Hanley, J., Singhal, M., & Rolleston, R. (2015). Supervised and unsupervised methods to detect insider threat from enterprise social and online activity data. Journal of Wireless Mobile Networks, Ubiquitous Computing, and Dependable Applications, 6(4), 47–63.

[11] Du M, Li F, Zheng G, et al. DeepLog: Anomaly Detection and Diagnosis from System Logs through Deep Learning, ACM SIGSAC Conference on Computer and Communications Security, ACM, 2017.

[12] He P, Zhu J, Zheng Z, et al. Drain: An online log parsing approach with fixed depth tree, 2017 IEEE International Conference on Web Services (ICWS). IEEE, 2017.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhao, Z., Xu, C. & Li, B. A LSTM-Based Anomaly Detection Model for Log Analysis. J Sign Process Syst 93, 745–751 (2021). https://doi.org/10.1007/s11265-021-01644-4

DOI : https://doi.org/10.1007/s11265-021-01644-4

Keywords

- Anomaly detection

- Log analysis

Source: https://link.springer.com/article/10.1007/s11265-021-01644-4
